Neural Network

This example runs inference with a neural network on the MNIST dataset. The example folder contains the following subfolders:

- python/ contains the serialized trained model (using ONNX), the script to train it, and the script to download the test set
- proto/ contains cpp protobuf parsers to read ONNX models
- groundTruth/ contains the network non-HiCR implementation used as a ground truth
- source/ contains the different variants of this example corresponding to different backends
  - acl.cpp corresponds to the ACL backend implementation. The available kernels are compiled for Huawei 910. Run kernels/compile-acl.sh to call ATC and compile the kernel.
  - opencl.cpp corresponds to the OpenCL backend implementation. The available kernels are compiled for OpenCL.
  - pthreads.cpp corresponds to the Pthreads backend implementation

The program follows the usual initialization already covered in the Kernel Execution example. After that, all the data related to the neural network, such as the weights and the test set, is loaded. Then the neural network is created and fed the images of the dataset one at a time.

Neural network configuration

The neural network is created and the corresponding weights are loaded. The input image is loaded as well.

// Create the neural network

auto neuralNetwork = NeuralNetwork(...);

// ONNX trained model

auto model = ...;

// Load data of the pre-trained model

neuralNetwork.loadPreTrainedData(model, hostMemorySpace);

// Create local memory slot holding the tensor image

auto imageTensor = loadImage(imageFilePath, ...);

Inference

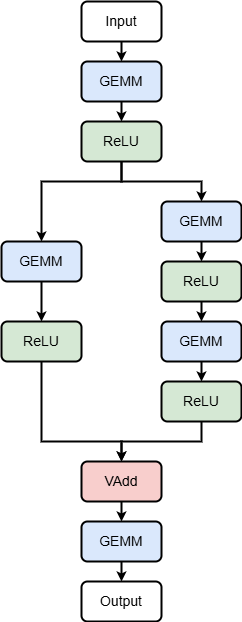

The network performs inference following the architecture depicted in Fig. 3 (Neural Network Architecture). The prediction is then extracted and compared with the one provided by the dataset.

Fig. 3 Neural Network Architecture

// Returns a memory slot with the predictions

const auto output = neuralNetwork.forward(imageTensor);

// Get the expected prediction

auto desiredPrediction = labels[i];

// Get the actual prediction

auto actualPrediction = neuralNetwork.getPrediction(output);

// Register failures

if (desiredPrediction != actualPrediction) { failures++; }
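The comparison step above can be sketched independently of HiCR. The helpers below are hypothetical and not part of the example's sources: `argmaxPrediction` assumes that the network output is a vector of per-class scores and that the predicted digit is the index of the highest score (a common convention, not confirmed here for `getPrediction`), and `countFailures` applies the same mismatch check to a batch of outputs.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <iterator>
#include <vector>

// Hypothetical helper: returns the index of the highest score.
// Assumption: the output holds one score per class, so the index is the predicted digit.
inline std::size_t argmaxPrediction(const std::vector<float> &scores)
{
  assert(!scores.empty());
  const auto best = std::max_element(scores.begin(), scores.end());
  return static_cast<std::size_t>(std::distance(scores.begin(), best));
}

// Hypothetical helper: counts the outputs whose predicted class differs from the label.
inline std::size_t countFailures(const std::vector<std::vector<float>> &outputs,
                                 const std::vector<std::size_t>        &labels)
{
  assert(outputs.size() == labels.size());
  std::size_t failures = 0;
  for (std::size_t i = 0; i < outputs.size(); i++)
  {
    // Same check as in the example: a mismatch is registered as a failure
    if (argmaxPrediction(outputs[i]) != labels[i]) { failures++; }
  }
  return failures;
}
```

In the real example the scores live in a memory slot returned by `neuralNetwork.forward(...)`; the plain `std::vector` here only stands in for that data.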

The expected result depends on how many images are fed to the network:

# Output with 300 images (configured as test in meson)

Analyzed images: 100/10000

Analyzed images: 200/10000

Total failures: 10/300

# Output with 10K images (entire MNIST test set, used for the HiCR paper)

Analyzed images: 100/10000

Analyzed images: 200/10000

...

Analyzed images: 9800/10000

Analyzed images: 9900/10000

Total failures: 526/10000
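The failure counts can be turned into an accuracy figure: 10 failures out of 300 images is roughly 96.67% accuracy, and 526 failures out of 10000 is 94.74%. A minimal sketch of that arithmetic follows; `accuracyPercent` is a hypothetical helper, not something the example provides.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical helper: converts a failure count into an accuracy percentage.
inline double accuracyPercent(std::size_t failures, std::size_t total)
{
  // Guard against division by zero and inconsistent counts
  assert(total > 0 && failures <= total);
  return 100.0 * static_cast<double>(total - failures) / static_cast<double>(total);
}
```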